(Inexpensive) Highly Available Storage Systems

Internet services have pampered our users with 99.9% uptime (roughly 8.8 hours of downtime per year), and now every corporation is expected to offer similar availability. Storage is one part of any high availability solution. In the good old proven corner we have SAN and NAS storage solutions. They are not automatically highly available (you must make sure the system has more than one controller in case one fails, RAID 5 is nowhere near enough these days so choose RAID 6, and don't forget network redundancy), but they are almost always expensive. In an era where IT directors are forced to think about 'cost transformation', cost-saving alternatives are always welcome.

New hardware developments have broadened our infrastructure options. Let's write the factors down:

- abundance of cheap gigabit Ethernet cards & routers -> this allows us to forget expensive fiber connectivity and to leverage newly developed distributed systems

- cheap large SATA drives -> large commodity storage for the masses

Developments in infrastructure support software have also given us more options:

- OpenSCSI iSCSI target -> converting our SATA drives into shared storage

- CLVM -> clustered LVM allows distributed volume management

- OCFS2 -> Oracle Cluster File System, an open source cluster file system developed by Oracle

- Ceph RADOS -> massive cluster storage technology

- Amazon Elastic Block Store (EBS) -> we need to run our servers in AWS to use it

- DRBD -> Distributed Replicated Block Device driver, which mirrors disks attached to different hosts and lets us choose between synchronous and asynchronous mirroring
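DRBD exposes this choice as replication protocols. According to the DRBD documentation, protocol A (asynchronous) reports a write as complete once it is on the local disk and in the local TCP send buffer, protocol B (memory synchronous) once the peer has received it, and protocol C (synchronous) only once both disks have written it. The toy Python model below is a sketch, not DRBD code: the milestone names are our own invention. It checks whether an acknowledged write can be lost if the primary's disk is destroyed right at the moment of acknowledgment.

```python
from enum import Enum


class Protocol(Enum):
    A = "asynchronous"
    B = "memory synchronous"
    C = "synchronous"


# Milestones a single write reaches on its way through a two-node mirror
# (hypothetical names for illustration, not DRBD's internal states).
LOCAL_DISK = "written to primary's disk"
SEND_BUFFER = "queued in primary's TCP send buffer"
PEER_MEMORY = "received by secondary"
PEER_DISK = "written to secondary's disk"

# Milestones each protocol waits for before reporting the write as complete.
ACK_AFTER = {
    Protocol.A: {LOCAL_DISK, SEND_BUFFER},
    Protocol.B: {LOCAL_DISK, PEER_MEMORY},
    Protocol.C: {LOCAL_DISK, PEER_DISK},
}


def acknowledged(protocol: Protocol, reached: set[str]) -> bool:
    """Has the application already been told that the write is done?"""
    return ACK_AFTER[protocol] <= reached


def on_secondary(reached: set[str], secondary_crashed_too: bool) -> bool:
    """Does the secondary still hold the write after the primary's disk is lost?"""
    if secondary_crashed_too:
        return PEER_DISK in reached  # its RAM is gone, only its disk counts
    return PEER_MEMORY in reached or PEER_DISK in reached


def acked_write_lost(protocol: Protocol, reached: set[str],
                     secondary_crashed_too: bool = False) -> bool:
    """The dangerous case: the write was acknowledged but the survivor lacks it."""
    return acknowledged(protocol, reached) and not on_secondary(
        reached, secondary_crashed_too)


if __name__ == "__main__":
    # Milestones reached at the exact moment each protocol acknowledges.
    moment_of_ack = {
        Protocol.A: {LOCAL_DISK, SEND_BUFFER},
        Protocol.B: {LOCAL_DISK, SEND_BUFFER, PEER_MEMORY},
        Protocol.C: {LOCAL_DISK, SEND_BUFFER, PEER_MEMORY, PEER_DISK},
    }
    for proto, reached in moment_of_ack.items():
        print(proto.name, acked_write_lost(proto, reached),
              acked_write_lost(proto, reached, secondary_crashed_too=True))
    # A True True
    # B False True
    # C False False
```

Only protocol C keeps every acknowledged write even if the secondary crashes at the same moment, which is why the DRBD documentation describes it as the most commonly used protocol; A and B trade some of that safety for lower write latency.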

A question on Server Fault asks about mature high availability storage systems. Summarizing the answers:

- Surprisingly, the Oracle-developed OCFS2 is not the tool of choice, because adding capacity to the cluster filesystem requires downtime

- Ceph RADOS is a promising newcomer in the arena, offering Google File System-like characteristics together with a standard block interface (RBD). Established enterprises usually hesitate to adopt such new technology.

- The ultimate choice of sysadmins is HA NFS (highly available NFS).

HA NFS

The requirements for highly available NFS are as follows:

- two hosts (PCs or servers)

- two sets of similarly sized storage (identical SATA II disks preferred)

- cluster resource manager : Pacemaker

- cluster messaging : Heartbeat (alternative: Corosync)

- logical volume manager : LVM2

- NFS daemon

- DRBD (Distributed Replicated Block Device)

Distributed Replicated Block Device (http://www.drbd.org/)

Installation instructions are available in the references below. In short:

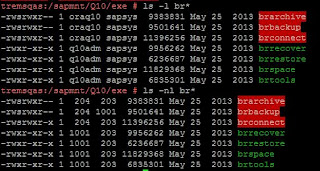

- install DRBD devices (e.g. drbd0) on top of the physical disks (e.g. sda1) by filling in /etc/drbd.d/nfs. Do the same on both hosts (servers)

- configure LVM to ignore the underlying physical disk (e.g. sda1) and to read volumes from the DRBD device (e.g. drbd0) instead, so that it does not see the same physical volume twice; also disable the LVM cache (all in /etc/lvm/lvm.conf)

- create the LVM physical volume (pvcreate), volume group (vgcreate) and logical volume (lvcreate) on the DRBD device. See your favorite LVM tutorial for details.

- install & configure Heartbeat

- install & configure Pacemaker. Pacemaker must be configured with these resources:

- DRBD resource (ocf:linbit:drbd), which automatically switches DRBD between master and slave roles as the situation requires

- NFS daemon resource (lsb:nfs or lsb:nfs-kernel-server)

- LVM and filesystem resources (ocf:heartbeat:LVM, ocf:heartbeat:Filesystem)

- NFS exports resource (ocf:heartbeat:exportfs)

- floating IP address resource (ocf:heartbeat:IPaddr2)
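The lvm.conf step in the list above is the one most easily gotten wrong. LVM's `filter` setting is a list of regular expressions, each prefixed with `a` (accept) or `r` (reject) and wrapped in a delimiter such as `|`; the first pattern that matches a device path decides, and a device matching no pattern is accepted. The Python model below is a simplified sketch of that rule: it checks a single path, whereas LVM also looks at a device's alias names, and the filter used in the example (accept only DRBD devices, reject everything else) is a hypothetical choice you would adapt to your own disks.

```python
import re


def parse_filter(patterns: list[str]) -> list[tuple[bool, re.Pattern]]:
    """Turn lvm.conf-style entries such as "a|/dev/drbd.*|" into (accept, regex) pairs."""
    rules = []
    for entry in patterns:
        if len(entry) < 3 or entry[0] not in ("a", "r") or not entry.endswith(entry[1]):
            raise ValueError(f"malformed filter entry: {entry!r}")
        # entry[1] is the delimiter; the regex sits between it and the final delimiter.
        rules.append((entry[0] == "a", re.compile(entry[2:-1])))
    return rules


def lvm_accepts(device: str, patterns: list[str]) -> bool:
    """First matching pattern wins (unanchored search); no match means accepted."""
    for accept, regex in parse_filter(patterns):
        if regex.search(device):
            return accept
    return True


if __name__ == "__main__":
    drbd_only = ["a|/dev/drbd.*|", "r|.*|"]  # hypothetical filter
    print(lvm_accepts("/dev/drbd0", drbd_only))  # True
    print(lvm_accepts("/dev/sda1", drbd_only))   # False
```

The trailing catch-all reject matters: without it, any device not matched by an earlier pattern (including the backing disk sda1) would still be accepted.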

Illustration of a two-host HA NFS system

Automatic failover can be enabled so that NFS service continues with minimal interruption during a failover. The advantages of this HA NFS configuration are:

- clients use the old, proven NFS interface

- real-time synchronous replication ensures that writes acknowledged to clients are not lost when one host fails
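During a failover the resources listed earlier have to move in a fixed order: stop top-down on the failing node, start bottom-up on the survivor. The sketch below derives such an order with Python's standard `graphlib.TopologicalSorter`. The resource names, node names (`nfs1`, `nfs2`) and the dependency list are hypothetical; they only mirror a common HA NFS layout (promote DRBD, activate the volume group, mount the filesystem, start the NFS daemon, export, then bring up the floating IP). It does not talk to Pacemaker.

```python
from graphlib import TopologicalSorter

# Hypothetical resource names; each entry lists the resources that must
# already be running before it can start.
DEPENDS_ON = {
    "drbd_master": set(),                    # ocf:linbit:drbd, promoted
    "lvm_vg": {"drbd_master"},               # ocf:heartbeat:LVM
    "fs_nfs": {"lvm_vg"},                    # ocf:heartbeat:Filesystem
    "nfs_daemon": set(),                     # lsb:nfs-kernel-server
    "exportfs": {"fs_nfs", "nfs_daemon"},    # ocf:heartbeat:exportfs
    "floating_ip": {"exportfs"},             # ocf:heartbeat:IPaddr2
}


def start_order(depends_on: dict[str, set[str]]) -> list[str]:
    """Dependencies first; raises graphlib.CycleError on circular constraints."""
    return list(TopologicalSorter(depends_on).static_order())


def failover_plan(depends_on: dict[str, set[str]], failed_node: str,
                  survivor: str) -> list[tuple[str, str, str]]:
    """Stop the stack top-down on the failed node, then start it bottom-up on the survivor."""
    order = start_order(depends_on)
    stops = [(failed_node, "stop", res) for res in reversed(order)]
    starts = [(survivor, "start", res) for res in order]
    return stops + starts


if __name__ == "__main__":
    for node, action, resource in failover_plan(DEPENDS_ON, "nfs1", "nfs2"):
        print(f"{node}: {action} {resource}")
```

In a real cluster this order is expressed through Pacemaker order and colocation constraints. The floating IP comes up last, so clients reach the new host only once the export is ready. A node that has actually crashed cannot run its stop actions; Pacemaker has to fence it before the survivor takes over.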

The disadvantages of the configuration shown are:

- no horizontal scalability

- the capacity of the standby (second) host is not leveraged

Linbit (www.linbit.com) provides enterprise support for DRBD.

Ceph RADOS

Ceph (http://ceph.com/docs/master/) is a distributed object storage system with these features:

- massive clustering with thousands of Object Storage Devices (OSDs); Ceph can run with as few as 2 OSDs

- automated data replication with per-pool replication settings (e.g. metadata: 3x replication, data: 2x replication)

- data striping across the cluster to improve performance

- a POSIX filesystem client (CephFS), an OpenStack Swift-compatible interface, and even a REST interface

- a block device interface (RBD) -> suitable for virtualization, supported by OpenStack clouds

- horizontal scalability: add more OSDs and/or disks for more storage or performance
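Two bits of arithmetic help when sizing such a cluster. Replication multiplies raw usage: with 2x replication every byte is stored twice, so usable capacity is roughly raw capacity divided by the replica count. And an RBD image is split into fixed-size RADOS objects (4 MiB by default), which CRUSH distributes across OSDs, so a byte offset maps to an object by integer division. The helpers below sketch that arithmetic under stated assumptions: default RBD layout without custom striping, the free headroom Ceph needs to re-replicate after an OSD failure is ignored, and the OSD sizes and pool sizes are made up.

```python
MiB = 1024 ** 2
GiB = 1024 ** 3
TiB = 1024 ** 4


def usable_capacity(raw_bytes: int, replicas: int) -> float:
    """Rough usable space of a pool whose data is stored `replicas` times."""
    if replicas < 1:
        raise ValueError("replicas must be >= 1")
    return raw_bytes / replicas


def raw_needed(pools: dict[str, tuple[int, int]]) -> int:
    """Raw bytes consumed by pools given as {name: (stored_bytes, replicas)}."""
    return sum(size * replicas for size, replicas in pools.values())


def rbd_object_for(offset: int, object_size: int = 4 * MiB) -> tuple[int, int]:
    """Map a byte offset in an RBD image to (object number, offset inside that object)."""
    return divmod(offset, object_size)


if __name__ == "__main__":
    raw = 3 * 2 * TiB                        # hypothetical: three OSDs of 2 TiB each
    print(usable_capacity(raw, 2) / TiB)     # 3.0
    pools = {"data": (1 * TiB, 2), "metadata": (10 * GiB, 3)}
    print(raw_needed(pools) / GiB)           # 2078.0
    print(rbd_object_for(10 * MiB))          # (2, 2097152)
```

Because consecutive 4 MiB chunks of an image land in different objects, a large sequential read or write touches many OSDs at once, which is where the striping performance gain comes from.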

Illustration of a Ceph RADOS cluster

The disadvantages of a Ceph RADOS cluster are:

- new technology; operating it needs training and hands-on experience

- stability is not yet industry-proven, although it has already seen large deployments

Inktank (http://www.inktank.com/) and Hastexo GmbH (www.hastexo.com) provide enterprise support for Ceph cluster deployments.

Conclusion

Inexpensive storage technology now exists that can be leveraged to provide highly available and/or high-performance storage for our legacy applications that are still cluster-unaware.
