Enhancing MySQL Query Performance - July 2015

Background

This post is meant to document steps that I took in July 2015 to fix a MySQL-related performance problem.

Problem Identification

The symptoms is that pages of one application have very large response time. First thing we check is the CPU usage of the application server, which is < 25%, meaning that there are no issue in the application servers' CPU usage. And need to check logical limits also, in this case, Apache's MaxClients.. and compare it with the concurrent HTTP connections to the server. This is also < 25%. The second part we check is the database server. Check the CPU usage.. and gotcha, it was > 70%. With application server have almost no load, this means query executions in the database server were not optimal. Next we check the queries.

MySQL Processlist

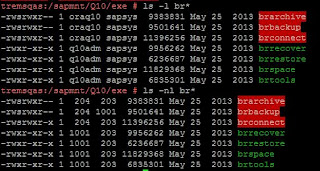

To examine queries running in a MySQL database server, open mysql command line client, and we could check the processlist using :

SHOW FULL PROCESSLIST \G

This should be run as mysql root. But if you only have login to a normal database user, try running show full processlist too. Sometimes we are granted access to see processlist of our own queries.

However, 'Full' is not 'Full' enough, for very long queries the output will be cut. The alternative for modern MySQL servers are the information_schema.processlist table.

SELECT * FROM INFORMATION_SCHEMA.PROCESSLIST

On a busy database server that are serving multiple applications, grouping by user and state would be handy to get a brief overview of current load :

select user,db,state,count(*) from information_schema.processlist group by user,db,state;

The problem that warrants our attention is 'copying to tmp table'. For the July events (yes, there are 2 separate occasion) high number of processes on this state indicates something wrong with the query.

Use this query to find out the full query :

select * from information_schema.processlist where state <> '' and user = 'someuser' and db = 'somedb';

This will get us a snapshot of queries that are currently being run, in which the most often query that pops up would be the query that have the most significant impact in the system at that time.

It is sufficient if we do analysis during the time of the incident. If we need to check what queries being run in the past, we use the AppDynamics for Database product, in which we could select a time range and find out top queries in that period. MySQL slow queries log can also be used as an alternative if such tool are not available, but you need to take into account the frequency and elapsed time of each query by your own.

Verify query runtime

To verify query execution time, use MySQL client to execute select queries. If the runtime is not consistent, like : 0.4 seconds, 10 seconds, 0.3 second, 0.2 second, it may means that the query cache is being used each time we have execution time < 1 seconds. To measure actual query performance, use SQL_NO_CACHE like :

SELECT SQL_NO_CACHE col1,col2 from T1 WHERE ...

Case A

For the first case, the query is a simple select with one join clause. The query is very often executed, its execution drags the database server into a crawl. For each problematic query, it is a good thing to run explain plan first :

EXPLAIN SELECT a.id, a.title, a.description, a.publish_date, a.category_id, b.name, a.upload_date, HOUR(TIMEDIFF(NOW(), a.publish_date)) as hour_dif FROM intra_berita a, intra_user b WHERE a.upload_by = b.id AND status = '3' AND a.category_id = 3 ORDER BY publish_date DESC LIMIT 3;

For this case, the result is that the index related to the where condition is not being used. This problem might be related to the bug https://mariadb.atlassian.net/browse/MDEV-7084, where ANALYZE table must be run manually. Anyway the problem were fixed by running ANALYZE TABLE on the table :

ANALYZE TABLE intra_berita;

Case B

SELECT b.OBJIDPOSISI OBJID_POSISI,t.N_NIK,t.V_NAMA_KARYAWAN,b.V_LONG_POSISI V_SHORT_POSISI,b.V_SHORT_UNIT, b.V_SHORT_DIVISI,b.V_PERSONNEL_SUBAREA,b.V_BAND_POSISI band,(case when plan.peminat is null then 0 else plan.peminat end) peminat,b.pmu PMU

FROM `FT_T_RPT0001` `t` right join (

SELECT tom.OBJIDPOSISI,tom.C_KODE_DIVISI, tom.V_BAND_POSISI, tom.V_LONG_POSISI, tom.V_SHORT_UNIT, tom.V_SHORT_DIVISI, tom.V_PERSONNEL_SUBAREA, pmu.matchup pmu

FROM FT_T_RPTOM tom INNER JOIN FT_T_JOBID_OBJID tjo ON (tjo.OBJID = tom.OBJIDPOSISI

AND tjo.C_TAMPIL = 1) INNER JOIN (

SELECT m.JOBID, m.NILAI_AKHIR matchup

FROM FT_T_PMU_ITEM_SUM_ALL_50 m

WHERE m.NIK='#redacted#' ) pmu ON (pmu.JOBID = tjo.JOBID)

WHERE SUBSTRING(tom.C_KODE_DIVISI,1,3)<>"JVC" ) b on (b.OBJIDPOSISI=t.OBJID_POSISI) LEFT JOIN (

SELECT p.OBJIDPOSISI,COUNT(p.OBJIDPOSISI) peminat

FROM FT_T_CAREER_PLAN p JOIN FT_T_JOBID_OBJID obj on (obj.OBJID=p.OBJIDPOSISI)

GROUP BY p.OBJIDPOSISI ) plan ON (plan.OBJIDPOSISI=b.OBJIDPOSISI)

WHERE b.V_BAND_POSISI IN ('IV', 'V')

ORDER BY b.OBJIDPOSISI desc

LIMIT 10

The second case is more complicated, because it involves nested query and multiple join (4-5 tables in one query). After running ANALYZE TABLE on one of the biggest table (FT_T_PMU_ITEM_SUM_ALL_50), the runtime improves somewhat. But after the data get updated the query still runs in the range of 6-15 seconds.

What we found is two blocks of query that each takes no more than 3 seconds but when joined using LEFT JOIN it becames more than 10 seconds. Changing to JOIN improve the execution time to under 2 seconds. But of course the result is fewer than LEFT JOIN, so it is not a solution.

The workaround is to avoid LEFT JOIN. The purpose of left join is only to obtain one column, 'peminat', which is a grouped row count of FT_T_CAREER_PLAN. So we change the application to do the row counting outside the primary query. We do n queries to only do row counting, simplifying the primary query.

The workaround works nicely turning 8-15 seconds page into 0.5 second page. However there are many queries that use a similar LEFT JOIN clause, which the application team works one-by-one to to remove such clause and converting it into additional query.

Conclusion

Several things could became a factor for MySQL performance problems, one is that table index statistics might not get updated in a timely manner, and the second is that a complicated query could became a burden to both the database and the developer. If I remember correctly, Facebook uses MySQL server (hundreds of them) and could have blazingly fast performance because they never do a JOIN query.

Comments