SAP System Copy Lessons Learned

Background

Earlier this year I was part of a team that performed a System Copy of an SAP ERP RM-CA system with more than 20 terabytes of data. Right now I am involved in two more system copies within just over one week, for a much smaller amount of data. In this blog I would like to note some lessons learned from these experiences. For the record, we are migrating from HP-UX and AIX to the Linux x86 platform.

Things that go wrong

First, following the System Copy guide carefully is quite a lot of work, mainly because some important information is hidden in references within the guide. Reading an SAP Note that is referenced in another SAP Note, which in turn is referenced in the Installation Guide, is a bit too much. Let me describe the things that went wrong.

VM Time drift

Our Oracle RAC cluster had a time drift problem that killed one instance when the other was shutting down. The cure for our VMware-based Linux database servers is hidden in SAP Note 989963 "Linux VMWARE Timing": basically, add "tinker panic 0" to ntp.conf and remove the local undisciplined time source. I believe additional kernel parameters are needed if your kernel is not recent enough.
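A minimal Python sketch of the ntp.conf change: it puts "tinker panic 0" at the top and drops the local clock lines. It assumes the undisciplined local source is configured through the LOCAL clock driver pseudo-address 127.127.1.0 (the usual "server" plus "fudge" pair); the NTP server name in the sample is hypothetical. Compare the result with your own file and SAP Note 989963 before using it.

```python
def patch_ntp_conf(text: str) -> str:
    """Return ntp.conf content adjusted along the lines of SAP Note 989963 (sketch)."""
    # Assumption: the local undisciplined clock uses the LOCAL driver, 127.127.1.x
    local_clock_prefix = "127.127.1."
    kept = []
    for line in text.splitlines():
        words = line.split()
        # Drop "server 127.127.1.0 ..." and "fudge 127.127.1.0 ..." lines
        if (len(words) >= 2 and words[0] in ("server", "fudge")
                and words[1].startswith(local_clock_prefix)):
            continue
        # Drop an existing "tinker panic" line; it is re-added at the top below
        if words[:2] == ["tinker", "panic"]:
            continue
        kept.append(line)
    # "tinker panic 0" disables the panic threshold, so ntpd does not exit on a large offset
    return "\n".join(["tinker panic 0"] + kept) + "\n"


# Hypothetical ntp.conf contents
sample = """server 127.127.1.0
fudge 127.127.1.0 stratum 10
server ntp1.example.com iburst
driftfile /var/lib/ntp/drift/ntp.drift
"""
print(patch_ntp_conf(sample), end="")
```

Running the function twice gives the same result, so it is safe to re-apply.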

Memory usage grows beyond what is being used

Memory usage was very high, given that our production cluster hosts many servers and therefore many concurrent database connections. The page table overhead was so large that the server was drowning in it. The cure is to enable hugepages in the kernel, allocate enough hugepages to hold the Oracle SGA, and make sure Oracle actually uses them. See SAP Note 1672954 "Hugepages for Oracle".
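To see why the page tables grow so large, here is a rough back-of-the-envelope sketch in Python. It assumes x86-64 (8-byte page table entries, 4 KB normal pages, 2 MB hugepages) and that every dedicated server process ends up mapping the whole SGA; it ignores page-table sharing and allocation granularity, so treat the numbers as an upper bound, not a measurement. The SGA size and process count are hypothetical.

```python
PTE_BYTES = 8            # one page table entry on x86-64
SMALL_PAGE = 4096        # normal page size
GiB = 1024 ** 3
MiB = 1024 ** 2


def page_table_bytes(sga_bytes: int, page_bytes: int, processes: int) -> int:
    """Rough upper bound: every process maps the whole SGA with its own entries."""
    entries_per_process = (sga_bytes + page_bytes - 1) // page_bytes  # ceiling division
    return entries_per_process * PTE_BYTES * processes


def hugepages_needed(sga_bytes: int, hugepage_kb: int) -> int:
    """Hugepages required to hold the SGA (candidate value for vm.nr_hugepages)."""
    page = hugepage_kb * 1024
    return (sga_bytes + page - 1) // page


def hugepage_size_kb(meminfo_text: str) -> int:
    """Read the 'Hugepagesize' line (in kB) from the contents of /proc/meminfo."""
    for line in meminfo_text.splitlines():
        if line.startswith("Hugepagesize:"):
            return int(line.split()[1])
    raise ValueError("Hugepagesize not found")


sga = 32 * GiB    # hypothetical SGA size
procs = 1000      # hypothetical number of dedicated server processes
print(page_table_bytes(sga, SMALL_PAGE, procs) // GiB)   # 62 (GiB with 4 KB pages)
print(page_table_bytes(sga, 2 * MiB, procs) // MiB)      # 125 (MiB with 2 MB hugepages)
print(hugepages_needed(sga, hugepage_size_kb("Hugepagesize:    2048 kB\n")))  # 16384
```

On a real host, pass the contents of /proc/meminfo to hugepage_size_kb. SAP Note 1672954 remains the authority on the exact settings.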

Temporary Sequential (TemSe) Objects

For the QA system copy, the step that deletes inconsistencies found by the TemSe consistency check took forever: a whole day, and it still had not finished. It seems the standard SAP daily job that purges job logs had never been run on that server. Our solution was to use report RSBTCDEL2 to delete job logs in age intervals, for example first deleting all finished job logs older than 1000 days, then those older than 500 days. See SAP Note 48400 for the kinds of objects stored in TemSe and the transaction or report used to purge each kind, for example report RSBTCDEL2 for job logs and RSBDCREO for batch input (BDC) sessions.
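The stepwise purge can be planned with a small helper. This is a hypothetical sketch: it halves the age limit from a starting value (1000 days, as above) until it reaches the retention you want to keep, so each RSBTCDEL2 run deletes a manageable slice of old job logs instead of everything at once. The halving strategy is my own assumption, not something the report or SAP Note 48400 prescribes.

```python
def purge_age_steps(first_days: int, retention_days: int) -> list[int]:
    """Hypothetical plan of 'older than N days' limits for successive purge runs."""
    if retention_days <= 0:
        raise ValueError("retention_days must be positive")
    steps = []
    limit = first_days
    # Halve the age limit each run while it is still above the target retention
    while limit > retention_days:
        steps.append(limit)
        limit //= 2
    # The last run purges everything beyond the retention we want to keep
    steps.append(retention_days)
    return steps


print(purge_age_steps(1000, 100))  # [1000, 500, 250, 125, 100]
```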

The lesson: do purge the job logs in your QA and DEV servers too. In our PROD system we keep jobs for 14 days; in QA and DEV, a retention of maybe 100 days is enough.

NFS problems

Our former senior Basis consultant confirmed that NFS on Linux is more problematic than on AIX. Our lessons learned:

- If you can avoid NFS access, avoid it, for example when transferring data files from source to target. NFS problems delayed our process by about 8 hours; we resolved this by obtaining terabytes of additional storage and copying the data files to local disk via SCP.

- NFS problems occur mostly after the NFS server has been restarted. Be prepared to restart the NFS service on each client first, and to reboot the client OS if things still don't work.

- The recommended NFS mount options for Linux are (ref: SAP Wiki):

rw,bg,hard,[intr],rsize=32768,wsize=32768,tcp,vers=3,suid,timeo=600
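As an illustration, the Python sketch below assembles an /etc/fstab line from the recommended options. The server name, export path, and mount point in the example are hypothetical placeholders. The brackets around intr above suggest it is optional; nfs(5) describes intr as deprecated on kernels after 2.6.25, so the helper lets you leave it out.

```python
# Options from the SAP Wiki recommendation quoted above
RECOMMENDED_NFS_OPTIONS = [
    "rw", "bg", "hard", "intr", "rsize=32768", "wsize=32768",
    "tcp", "vers=3", "suid", "timeo=600",
]


def fstab_entry(server: str, export: str, mount_point: str,
                include_intr: bool = True) -> str:
    """Build one /etc/fstab line for an NFSv3 mount (hypothetical helper)."""
    options = [o for o in RECOMMENDED_NFS_OPTIONS if include_intr or o != "intr"]
    # fstab fields: device, mount point, type, options, dump, fsck pass
    return f"{server}:{export} {mount_point} nfs {','.join(options)} 0 0"


# Hypothetical server and paths
print(fstab_entry("nfsserver", "/export/trans", "/usr/sap/trans", include_intr=False))
```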

Out of disk space in non-database partitions

This was a symptom of other problems, such as:

- Forgetting to deactivate the application servers during the system copy. The dev_w? trace files filled up with endless database connection errors after the system copy recreated the database.

- Using the wrong directory as the export destination.
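When a non-database partition fills up, the first job is to find what is growing. Here is a minimal Python sketch that lists the largest files under a directory, for example an instance work directory where the dev_w? traces live; the /usr/sap starting path is an assumption about your layout.

```python
import os


def largest_files(root: str, top: int = 10) -> list[tuple[int, str]]:
    """Return the `top` largest files under `root` as (size in bytes, path) pairs."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # lstat: do not follow symlinks, report the link itself
                found.append((os.lstat(path).st_size, path))
            except OSError:
                continue  # file vanished or is unreadable; skip it
    found.sort(reverse=True)
    return found[:top]


if __name__ == "__main__":
    # Assumed location; adjust to the partition that filled up
    for size, path in largest_files("/usr/sap", top=20):
        print(f"{size / 1024 ** 2:10.1f} MiB  {path}")
```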

Out of disk space in sapdata partition

Our database space estimate was far off from what was really needed. The mistake was estimating from the source system's database size, whereas sapinst creates data files with additional space added as a safety margin.

To estimate space correctly, be aware that:

- The sizes in DBSIZE.XML are good only for estimating SAP's core tablespaces; for SYSTEM and PSAPTEMP the estimate is a bit off. For SYSTEM we needed 800 MB more than DBSIZE.XML states in order to pass the ABAP Import phase successfully.

- SAP Note 936441 recommends a PSAPTEMP size of 20% of the total used data size, which is very different from the estimate in DBSIZE.XML. 20% might be a bit too much for large (>500 GB) databases, but if PSAPTEMP is too small, the creation of some large indexes might fail during the import. In our case, DBSIZE.XML estimated 10 GB for PSAPTEMP, and we needed 20 GB to pass the ABAP Import phase (according to Note 936441 it should have been 100 GB, though).

- Sapinst creates the SAP core tablespace data files with sizes that may consume more disk space than expected. Be ready to provide extra storage capacity for a smooth system copy. In our case, we were forced to shrink PSAPSR3 data files to make room for the SYSTEM and PSAPTEMP tablespaces.

- The default space reserved for root is 5% of each partition. That is a significant amount of wasted space: for example, 5% of a 600 GB sapdata partition is 30 GB. Some references say that ext2fs performance drops beyond 95% disk usage, but since sapdata mainly stores data files with autoextend off, this should not be an issue. For sapdata I changed the reserved space to 10000 blocks: tune2fs -r 10000 /x/y/sapdata
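The rules of thumb above are simple arithmetic; here they are as a small Python sketch. The 20%, 800 MB, and 5% figures come from the text, the 500 GB of used data is just an example, and the 4 KiB block size is an assumption (check "Block size" in the output of tune2fs -l for your file system).

```python
def psaptemp_gb(used_data_gb: float, percent: float = 20.0) -> float:
    """PSAPTEMP size by the 20%-of-used-data rule from SAP Note 936441."""
    return used_data_gb * percent / 100


def system_gb(dbsize_system_gb: float, extra_gb: float = 0.8) -> float:
    """SYSTEM size: the DBSIZE.XML figure plus the ~800 MB we needed on top."""
    return dbsize_system_gb + extra_gb


def root_reserved_gb(partition_gb: float, percent: float = 5.0) -> float:
    """Space held back for root by the default reserved-blocks percentage."""
    return partition_gb * percent / 100


def blocks_to_mib(blocks: int, block_size: int = 4096) -> float:
    """Size of a reserved-block count (as passed to tune2fs -r) in MiB.

    block_size defaults to an assumed 4 KiB.
    """
    return blocks * block_size / 1024 ** 2


print(psaptemp_gb(500))        # 100.0 for an example 500 GB of used data
print(root_reserved_gb(600))   # 30.0 GB held back on a 600 GB sapdata partition
print(blocks_to_mib(10000))    # 39.0625 MiB for 10000 blocks of 4 KiB
```

So with 4 KiB blocks, a 10000-block reserve keeps back only about 39 MiB instead of 30 GB.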

Permission issues

We used local Linux users for OS authentication and authorization, so UIDs and GIDs had to match across the servers to make transports via NFS work. We found only minimal hints about this issue in the System Copy guide. Our lessons so far:

- Restart sapinst after changing groups.

- saproot.sh is a tool to fix permissions AFTER the GIDs/UIDs have already been fixed.

- Double-check that you don't have duplicate entries in /etc/group or /etc/passwd as a result of your GID/UID update efforts.

- In SUSE there is an nscd service that caches groups; restart that service to refresh the cache, and you don't need to restart the OS.

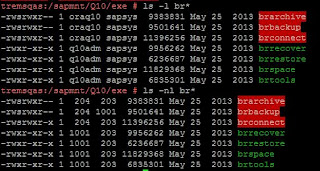

- ls -l can sometimes be confusing. ls -nl is better because it shows numeric UIDs and GIDs.
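Checking for duplicate or mismatched IDs is easy to script. Here is a minimal Python sketch that parses the contents of /etc/passwd or /etc/group (in both files the third field is the numeric ID), reports duplicate names and shared IDs, and compares two servers' files. The sample entries and IDs are hypothetical; a shared ID is not always an error, but it deserves a look.

```python
from collections import defaultdict


def parse_id_file(text: str) -> list[tuple[str, int]]:
    """Parse /etc/passwd or /etc/group content into (name, numeric id) pairs."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("#"):
            continue  # comment line
        fields = line.split(":")
        # The third field is the UID in /etc/passwd and the GID in /etc/group
        if len(fields) < 3 or not fields[2].isdigit():
            continue  # skip blank or malformed lines
        entries.append((fields[0], int(fields[2])))
    return entries


def duplicates(entries):
    """Names listed more than once, and IDs shared by more than one name."""
    by_name, by_id = defaultdict(list), defaultdict(list)
    for name, num in entries:
        by_name[name].append(num)
        by_id[num].append(name)
    dup_names = {n: ids for n, ids in by_name.items() if len(ids) > 1}
    dup_ids = {i: names for i, names in by_id.items() if len(names) > 1}
    return dup_names, dup_ids


def mismatches(local, remote):
    """Names defined on both servers whose numeric IDs differ."""
    a, b = dict(local), dict(remote)
    return {name: (a[name], b[name]) for name in a.keys() & b.keys() if a[name] != b[name]}


# Hypothetical /etc/group contents from two servers
src = "sapsys:x:1001:\ndba:x:1002:oracle\noper:x:1002:\n"
tgt = "sapsys:x:1001:\ndba:x:1005:oracle\n"
print(duplicates(parse_id_file(src)))
print(mismatches(parse_id_file(src), parse_id_file(tgt)))
```

To compare real servers, copy /etc/group (or /etc/passwd) from each host and pass the file contents to parse_id_file before calling mismatches.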

Performance issues

After migrating to the new OS and hardware environment, we faced some performance issues. SAP Notes that give some insight on this are Note 1817553 "What to do in case of general performance issues" and Note 853576 "Performance analysis w ASH and Oracle Advisors". Our current standard operating procedure is:

- Run an AWR report from ST04 -> Performance -> Wait Event Analysis -> Workload Reporting.

- For the AWR report, choose begin and end snapshots spanning the half hour in which the performance issue occurred.

- In the AWR result, check SQL statements with high elapsed time or I/O.

- Check the indexes and tables involved for stale statistics.

- Check index storage quality with report RSORAISQN (see Note 970538).

- Run the Oracle SQL Tuning Advisor on the SQL ID (sqltrpt.sql).

- Apply the recommendations.
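Picking the snapshot pair is mechanical: take the last snapshot at or before the start of the problem and the first one at or after its end. A hypothetical Python sketch with made-up snapshot IDs and times, assuming a 30-minute snapshot interval (Oracle's default interval is one hour, so a half-hour window may need a shorter interval or manual snapshots).

```python
from datetime import datetime


def bracket_snapshots(snapshots, issue_start, issue_end):
    """Return (begin_snap_id, end_snap_id) covering the issue window.

    snapshots: iterable of (snap_id, snapshot_time) pairs.
    """
    ordered = sorted(snapshots, key=lambda s: s[1])
    # Snapshots taken at or before the problem started
    before = [s for s in ordered if s[1] <= issue_start]
    # Snapshots taken at or after the problem ended
    after = [s for s in ordered if s[1] >= issue_end]
    if not before or not after:
        raise ValueError("no snapshots bracket the issue window")
    return before[-1][0], after[0][0]


# Hypothetical snapshots every 30 minutes
snaps = [(101, datetime(2024, 3, 4, 9, 0)), (102, datetime(2024, 3, 4, 9, 30)),
         (103, datetime(2024, 3, 4, 10, 0)), (104, datetime(2024, 3, 4, 10, 30))]
print(bracket_snapshots(snaps, datetime(2024, 3, 4, 9, 40), datetime(2024, 3, 4, 9, 55)))  # (102, 103)
```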

Summary

If you do only one dry run before the real System Copy, be prepared to handle unexpected things during the real process, such as obtaining additional storage. Some problems can be avoided by reading the System Copy guide carefully, including reading "between the lines".
